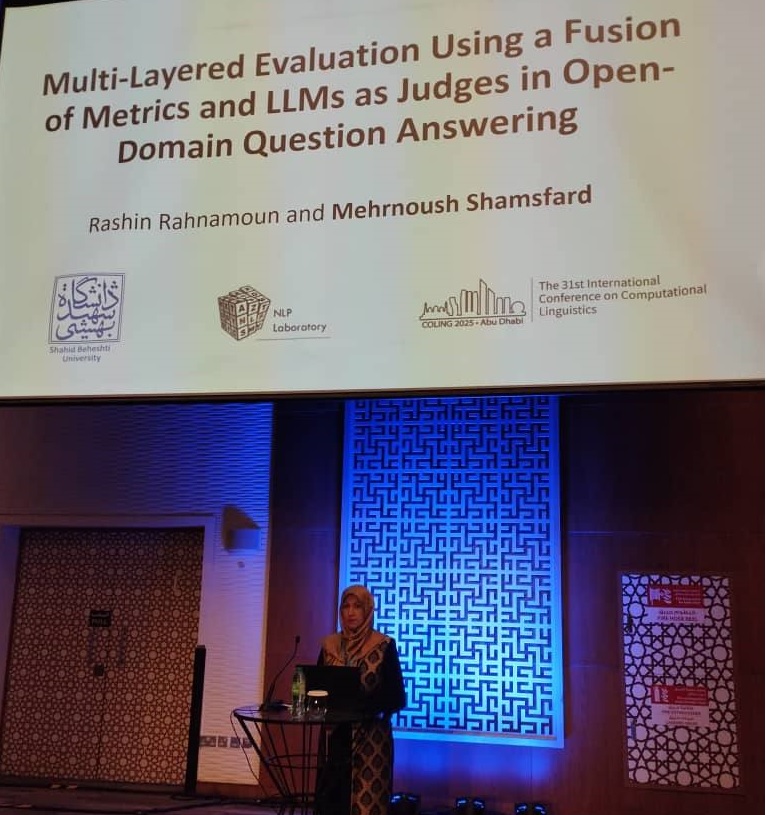

At the COLING 2025 conference, Dr. Mehrnoush Shamsfard and Ms. Rashin Rahnemoon presented a paper titled “Multi-Layered Evaluation Using a Fusion of Metrics and LLMs as Judges in Open-Domain Question Answering.”

Abstract:

Automatic evaluation of machine-generated texts, such as answers in open-domain question answering (Open-Domain QA), presents a complex challenge involving cost efficiency, hardware constraints, and high accuracy. Although various metrics exist for comparing machine-generated answers with reference (gold standard) answers, ranging from lexical metrics (e.g., exact match) to semantic ones (e.g., cosine similarity) and using large language models (LLMs) as judges, none of these approaches achieves perfect performance in terms of accuracy or cost. To address this issue, we propose two approaches to enhance evaluation. First, we summarize long answers and use the shortened versions in the evaluation process, demonstrating that this adjustment significantly improves both lexical matching and semantic-based metrics evaluation results. Second, we introduce a multi-layered evaluation methodology that combines different metrics tailored to various scenarios. This combination of simple metrics delivers performance comparable to LLMs as judges but at lower cost. Moreover, our fused approach, which integrates both lexical and semantic metrics with LLMs through our formula, outperforms previous evaluation solutions.
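
To make the idea of layering cheap metrics before an expensive judge more concrete, here is a minimal Python sketch. It is not the authors' method or formula, which the abstract does not spell out. The function names, the `accept_at` threshold of 0.8, and the `judge` callback (a stand-in for an LLM call) are all hypothetical, and a semantic layer such as embedding cosine similarity is left out for brevity.

```python
import re
import string
from collections import Counter
from typing import Callable, Optional


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, split into tokens."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted answer and a reference answer."""
    pred, ref = normalize(prediction), normalize(reference)
    if not pred or not ref:
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def layered_judgement(
    prediction: str,
    reference: str,
    judge: Optional[Callable[[str, str], bool]] = None,
    accept_at: float = 0.8,  # hypothetical threshold, not taken from the paper
) -> tuple[bool, str]:
    """Return (is_correct, layer_that_decided), trying cheap layers first."""
    # Layer 1: exact match after normalization (cheapest check).
    if normalize(prediction) == normalize(reference):
        return True, "exact_match"
    # Layer 2: accept on high lexical overlap.
    if token_f1(prediction, reference) >= accept_at:
        return True, "token_f1"
    # Layer 3: escalate to the expensive judge only when one is supplied.
    if judge is not None:
        return judge(prediction, reference), "judge"
    # No layer accepted the answer and no judge is available.
    return False, "no_layer_accepted"
```

In this sketch, a call such as `layered_judgement("The Eiffel Tower", "eiffel tower")` is settled by the first layer, so the judge is never invoked. Only answers that the cheap layers cannot accept reach the costly step, which is the cost argument the abstract makes.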